Everyone’s talking about AI agents. Almost no one should be building them yet.

Everyone’s talking about AI agents.

Autonomous systems. Multi-step reasoning. The future of work.

And I get it. The demos are compelling. The vision is exciting.

But here’s what nobody’s saying: if you can’t prove value from a simple AI workflow in 7 days, building an agent isn’t going to save you.

It’s going to bury you.

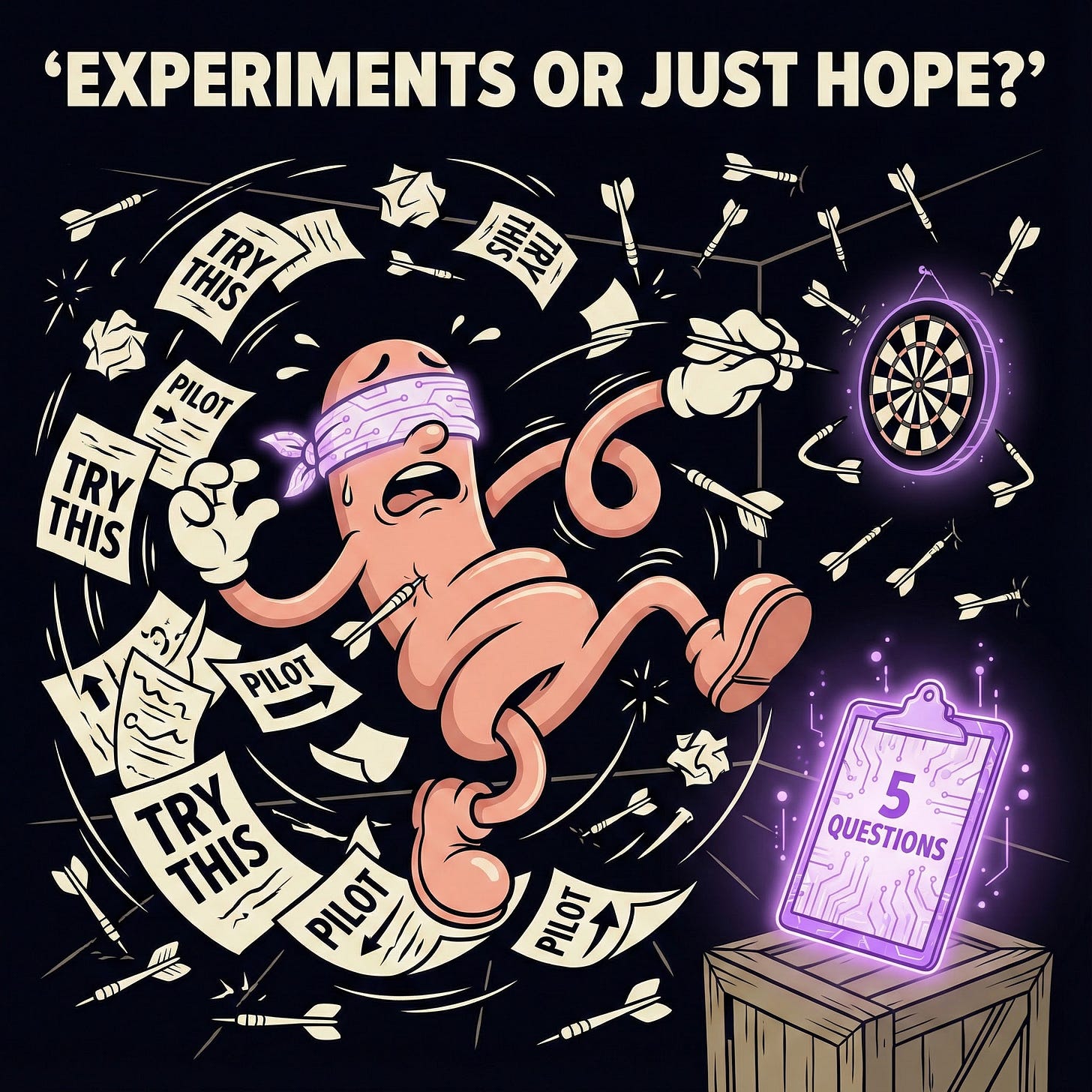

The Experimentation Trap

I’ve watched this pattern repeat across dozens of companies:

A team gets excited about AI. They launch a “pilot.” Six months later, they still can’t tell you if it worked.

Not because the AI failed. Because they never defined what success looked like.

They weren’t running experiments. They were just trying things.

And here’s the uncomfortable truth: trying things isn’t the same as proving things.

Experimentation is a scientific word. Most companies aren’t using it scientifically.

What the Data Actually Shows

MIT just released a study that should terrify every executive investing in AI:

Only 5% of AI pilots achieve rapid revenue acceleration.

Think about that. Ninety-five percent are stalling out. Not because the technology doesn’t work, but because companies can’t connect the activity to the outcome.

It gets worse:

- 46% of AI proof-of-concepts get scrapped before production (S&P Global Market Intelligence, 2025)

- 30% of GenAI projects will be abandoned after POC by end of 2025 (Gartner)

- Companies that do make it to production? It takes them an average of 8 months to get there (Gartner)

This isn’t a technology problem. It’s a methodology problem.

Companies are launching pilots in “safe sandboxes” with no clear path to deployment. The tech works in isolation, but when it’s time to go live, they hit a wall: integration, compliance, training, measurement.

Gartner calls it “pilot paralysis.”

I call it what happens when you skip the groundwork.

Experimentation vs. Trying Things

Here’s the difference:

Trying things looks like this:

- “Let’s give the sales team access to ChatGPT and see what happens.”

- “We’ll pilot this AI assistant for 3 months and evaluate.”

- “Let’s experiment with AI-generated content.”

Proving things looks like this:

- “We believe AI-assisted email drafts will reduce response time by 40%. We’ll measure current baseline (2.5 hours average), test for 7 days, and evaluate against our decision criteria: if response time drops below 1.5 hours, we deploy company-wide.”

See the difference?

One is hope. The other is science.

And science requires structure.

The 5 Questions That Turn Random Trying Into Real Experimentation

If you can’t answer these five questions before you start, you’re not experimenting—you’re just burning time:

1. HYPOTHESIS: What do you believe will improve?

Not “let’s see what happens.” What specifically do you think will get better?

- Faster report creation?

- Fewer customer support emails?

- Higher proposal win rates?

Name it. Make it concrete.

2. METRIC: How will you measure it?

“Productivity” isn’t a metric. “Time to first draft” is.

If you can’t measure it, you can’t prove it. And if you can’t prove it, you can’t scale it.

3. BASELINE: What’s the current state?

You can’t know if something improved if you don’t know where you started.

How long does the task take now? How many errors occur? What’s the current cost?

Measure it. Before you change anything.
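A baseline can be as simple as logging how long the task takes for a few days before any AI is involved. Here is a minimal Python sketch. It assumes you have recorded durations in hours. The helper name and the sample values are hypothetical and are not data from this article:

```python
from statistics import mean, median
from typing import NamedTuple


class Baseline(NamedTuple):
    n: int
    mean_hours: float
    median_hours: float


def summarize_baseline(durations_hours: list[float]) -> Baseline:
    """Summarize pre-AI task durations so later results have a reference point."""
    if not durations_hours:
        raise ValueError("Record at least one measurement before changing anything.")
    return Baseline(
        n=len(durations_hours),
        mean_hours=mean(durations_hours),
        median_hours=median(durations_hours),
    )


# Hypothetical response times (hours) logged over one week, before the pilot starts.
baseline_log = [2.0, 3.0, 2.5, 2.5, 2.0, 3.0, 2.5]
print(summarize_baseline(baseline_log))
# Baseline(n=7, mean_hours=2.5, median_hours=2.5)
```

The sketch reports the median alongside the mean because a few unusually slow days can pull the mean up.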

4. TIMEFRAME: How long will you test?

Here’s the thing: 7 days beats 7 months.

Most AI experiments don’t need months. They need days. A focused week with clear measurement tells you everything you need to know.

If it doesn’t show value in 7 days, extending it to 90 won’t save it.

5. DECISION CRITERIA: What makes this a success?

This is where most pilots die. Nobody decides upfront what “good enough to deploy” actually means.

- 20% time savings? 40%? 60%?

- 90% accuracy? 95%?

- Positive user feedback from 70% of testers?

Set the threshold. Before you start. Then stick to it.
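One way to make “set the threshold before you start” concrete is to write the five answers down as a single record and let a fixed rule make the call. Here is a minimal Python sketch. It assumes a metric where lower is better. The class and field names are hypothetical, and the numbers reuse the email-draft example from earlier in this article:

```python
from dataclasses import dataclass


@dataclass(frozen=True)  # frozen: the criteria can't be edited once the test starts
class ExperimentPlan:
    hypothesis: str
    metric: str
    baseline: float          # current state, measured before any change
    timeframe_days: int
    deploy_threshold: float  # decision criterion, agreed upfront

    def target_improvement(self) -> float:
        """Relative improvement the threshold implies versus the baseline."""
        return (self.baseline - self.deploy_threshold) / self.baseline

    def decide(self, observed: float) -> str:
        """Apply the pre-agreed rule; for this metric, lower is better."""
        return "deploy" if observed < self.deploy_threshold else "kill or iterate"


plan = ExperimentPlan(
    hypothesis="AI-assisted email drafts reduce response time",
    metric="average response time (hours)",
    baseline=2.5,
    timeframe_days=7,
    deploy_threshold=1.5,
)
print(f"{plan.target_improvement():.0%}")  # 40%
print(plan.decide(1.3))  # deploy
print(plan.decide(1.8))  # kill or iterate
```

Making the record immutable is a small guard against quietly moving the goalposts halfway through the week.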

The PhD Trap: Why Overthinking Kills Momentum

I’ve seen teams spend 3 months designing the “perfect experiment.”

They debate variables. They map dependencies. They build elaborate measurement systems.

And by the time they’re ready to start, the business has moved on.

Here’s what they miss: you don’t need a perfect experiment. You need a clear one.

The 5 questions above? You can answer them in 30 minutes.

Then you run it. For a week. And you know.

Speed without sloppiness. That’s the balance.

Don’t overthink the experiment design. Just make sure you have one.

Why 7 Days Beats 7 Months

Every company I work with asks: “How long should we pilot this?”

My answer: As short as possible while still being valid.

For most AI workflows, that’s 7 days. Maybe 14 if you need statistical significance.

Here’s why short timeframes work:

1. Urgency forces clarity

When you only have a week, you can’t afford vague goals. You have to define success upfront.

2. Feedback loops are faster

Problems surface immediately. You fix them in real-time instead of discovering them in month 5.

3. The opportunity cost is lower

If it doesn’t work, you’ve lost a week—not a quarter.

4. Momentum doesn’t die

Three-month pilots lose steam. People forget why they started. Priorities shift.

A week? Everyone stays focused.
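If you do stretch to 14 days for statistical significance, you still need a way to tell a real shift from noise. One assumption-light option, which this article does not itself prescribe, is a permutation test on the before and after measurements. Here is a Python sketch that uses only the standard library. The function name is hypothetical, and the sample timings are made up for illustration:

```python
import random
from statistics import mean


def permutation_p_value(before: list[float], after: list[float],
                        n_resamples: int = 10_000, seed: int = 0) -> float:
    """One-sided permutation test: how often does a random relabeling of the
    pooled measurements show a drop at least as large as the observed one?"""
    observed_drop = mean(before) - mean(after)
    pooled = before + after          # new list, so the inputs are not shuffled
    rng = random.Random(seed)        # seeded so the result is reproducible
    hits = 0
    for _ in range(n_resamples):
        rng.shuffle(pooled)
        fake_before = pooled[:len(before)]
        fake_after = pooled[len(before):]
        if mean(fake_before) - mean(fake_after) >= observed_drop:
            hits += 1
    # +1 in numerator and denominator keeps the estimate from being exactly 0
    return (hits + 1) / (n_resamples + 1)


before = [2.4, 2.6, 2.5, 2.7, 2.3, 2.6, 2.5]  # hypothetical baseline week (hours)
after = [1.4, 1.6, 1.5, 1.3, 1.7, 1.5, 1.4]   # hypothetical AI-assisted week (hours)
p = permutation_p_value(before, after)
print(f"p-value: {p:.4f}")  # a small value suggests the drop is unlikely to be noise
```

The test makes no assumption that response times are normally distributed. That suits a one- or two-week sample, which is usually too small to check such assumptions.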

The Real Cost of Skipping This

Let’s do the math:

- Average time from POC to production: 8 months (for projects that make it)

- Average percentage that make it: 54% (industry average)

- Cost of a failed AI pilot: $4M - $20M (Gartner)
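Those bullets list the inputs but stop short of the arithmetic. Here is a back-of-the-envelope Python sketch that combines them. It treats the 46% that don’t reach production (100% minus 54%) as failures and multiplies that rate by the $4M to $20M failure cost. That multiplication is a simplifying assumption, not something the cited sources state, and the function name is hypothetical:

```python
def expected_failure_cost(p_fail: float, cost_low: float,
                          cost_high: float) -> tuple[float, float]:
    """Expected loss per pilot = probability of failure x cost of one failure."""
    return p_fail * cost_low, p_fail * cost_high


P_PRODUCTION = 0.54         # share of POCs that reach production (figure above)
COST_RANGE = (4e6, 20e6)    # cost of a failed pilot in USD (figure above)

low, high = expected_failure_cost(1 - P_PRODUCTION, *COST_RANGE)
print(f"Expected loss per pilot: ${low / 1e6:.2f}M to ${high / 1e6:.2f}M")
# Expected loss per pilot: $1.84M to $9.20M
```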

Now imagine a different path:

- Week 1: Run structured 7-day experiment with 5-question framework

- Week 2: If it works, deploy. If it doesn’t, kill it or iterate.

- Week 3: Start the next one.

By the time a traditional pilot finishes planning, you’ve already run 12 experiments and deployed the 3 that worked.

That’s the power of structured speed.
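The three-week path above works out to roughly one experiment every two weeks: run in week 1, decide in week 2, start the next in week 3. Here is a quick Python sketch that checks that cadence against the 8-month POC-to-production average cited earlier. It assumes experiments run strictly one after another and uses 52 weeks per year. Both helper names are hypothetical:

```python
def cycles_in(months: float, weeks_per_cycle: int = 2) -> int:
    """Number of back-to-back experiment cycles that fit in a span of months."""
    weeks = months * 52 / 12  # about 4.33 weeks per month
    return int(weeks // weeks_per_cycle)


def months_for(n_cycles: int, weeks_per_cycle: int = 2) -> float:
    """Calendar months needed to run n_cycles back-to-back experiments."""
    return n_cycles * weeks_per_cycle * 12 / 52


print(cycles_in(8))               # 17
print(f"{months_for(12):.1f}")    # 5.5
```

At that pace, 8 months holds about 17 cycles, and a dozen experiments take about five and a half months. The figure of 12 therefore leaves some headroom.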

What This Means for AI Agents (And Why You’re Not Ready)

Back to where we started: AI agents.

Agents are powerful. Autonomous. Multi-step. Exciting.

They’re also the hardest form of AI to deploy successfully.

If you can’t structure a simple experiment around a single AI task, like “draft email replies” or “summarize meeting notes”, you have no business building an agent.

Because agents require:

- Clear success criteria (you don’t have those yet)

- Reliable measurement systems (you haven’t built them)

- Failure recovery processes (you haven’t tested them)

- User trust (you haven’t earned it)

All of that comes from the groundwork: small, structured, proven experiments.

Master the basics. Then build the agents.

Not the other way around.

The Quick Win Protocol: From Random Trying to Structured Proving

I built a framework for this. It’s called the AI Quick Win Protocol.

One page. Five questions. The exact structure you need to turn random trying into provable value.

Here’s what it includes:

The 5 Core Questions:

1. Hypothesis (what will improve?)

2. Metric (how will you measure?)

3. Baseline (what’s the current state?)

4. Timeframe (how long will you test?)

5. Decision criteria (what defines success?)

Plus the Leverage Question:

“What will we do with the freed capacity?”

Because here’s the thing: AI doesn’t just save time. It creates space.

If you don’t plan what to do with that space, it’ll just get filled with more tasks.

And you’ll be back where you started—busier than ever, wondering why AI didn’t help.

How to Get It

Comment “QUICKWIN” and I’ll send you the framework.

Use it this week. Pick one AI workflow. Answer the 5 questions. Run the experiment.

Seven days from now, you’ll know if it works.

And if it does, you’ll have proof. Not hope. Not hype.

Proof.

That’s how you move from pilot paralysis to deployment velocity.

That’s how you end up in the 5%, not the 95%.

One More Thing: Know Your Baseline

Structured experimentation starts with knowing your baseline.

Not just for individual tasks—for your entire organization’s AI readiness.

Where are you actually starting from? What’s mature? What’s missing?

I teamed up with the AI Maturity Index to build a maturity check that answers exactly that.

It’s a 10-minute assessment that gives you a clear picture of where you are—and where the gaps are.

Because you can’t improve what you can’t measure.

And you can’t measure what you haven’t baselined.

Damian Nomura helps companies adopt AI through consulting, advisory, and hands-on implementation. His approach is human-centered, focusing on fast value creation while building sustainable leadership practices. If your AI pilots keep stalling—or you’re not sure where to start—let’s talk.

Simple. Clear. Applicable.